Camera as a sensor

Horizon tracking and constructing a virtual gyro.

A few weeks ago I gave a presentation in which I described a robust method for horizon tracking, derived a virtual gyro from camera footage, drew a connection to the Mars helicopter navigation system, and used all of these techniques as a truth reference for our lab INS/GNSS EKF. Our lab EKF estimates roll, pitch, and yaw without being able to sense them directly, but with a camera we can quantify and validate the EKF's performance against a “truth” reference.
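The core idea of using the horizon as an attitude reference fits in a few lines. This is a minimal sketch under stated assumptions, not the method from the presentation: a distortion-free pinhole camera whose axes line up with the airframe (forward, right, down), a horizon treated as the line of zero elevation (dip due to altitude is ignored), |roll| below 90°, and a horizon already detected as two pixel points. The function name and parameters are hypothetical. Under those assumptions the horizon in normalized image coordinates satisfies sin(φ)·x + cos(φ)·y = tan(θ), so roll comes from the line's slope and pitch from its offset relative to the principal point.

```python
import math


def horizon_to_roll_pitch(p1, p2, fx, fy, cx, cy):
    """Estimate roll and pitch (radians) from two pixel points on the horizon.

    Hypothetical helper. Assumes a distortion-free pinhole camera aligned with
    the body axes, image u to the right and v down, the horizon taken as the
    zero-elevation line (altitude dip ignored), and |roll| < 90 degrees.
    """
    # Order the points left to right so the roll angle is not flipped by pi.
    (u1, v1), (u2, v2) = sorted([tuple(p1), tuple(p2)])
    # Normalized image coordinates on the z = 1 plane (x right, y down).
    x1, y1 = (u1 - cx) / fx, (v1 - cy) / fy
    x2, y2 = (u2 - cx) / fx, (v2 - cy) / fy
    # Horizon line: sin(roll)*x + cos(roll)*y = tan(pitch),
    # whose direction vector is (cos(roll), -sin(roll)).
    roll = math.atan2(-(y2 - y1), x2 - x1)
    # Signed distance of the line from the principal point gives tan(pitch).
    pitch = math.atan(math.sin(roll) * x1 + math.cos(roll) * y1)
    return roll, pitch
```

Running this on the horizon endpoints from each frame yields a camera-derived roll/pitch track that can be differenced directly against the EKF's estimates.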

As a bonus, I pull all these diverse elements together and use them as a visualization tool for our UAS flight controller and EKF. I show a visual proof of horizon truth, augmented reality, and autonomous landing.

Slides

Here is a link to the presentation slides.

Source Code

Open-source Python tools on GitHub.

Horizon tracking

Feature tracking with airframe (self) masking

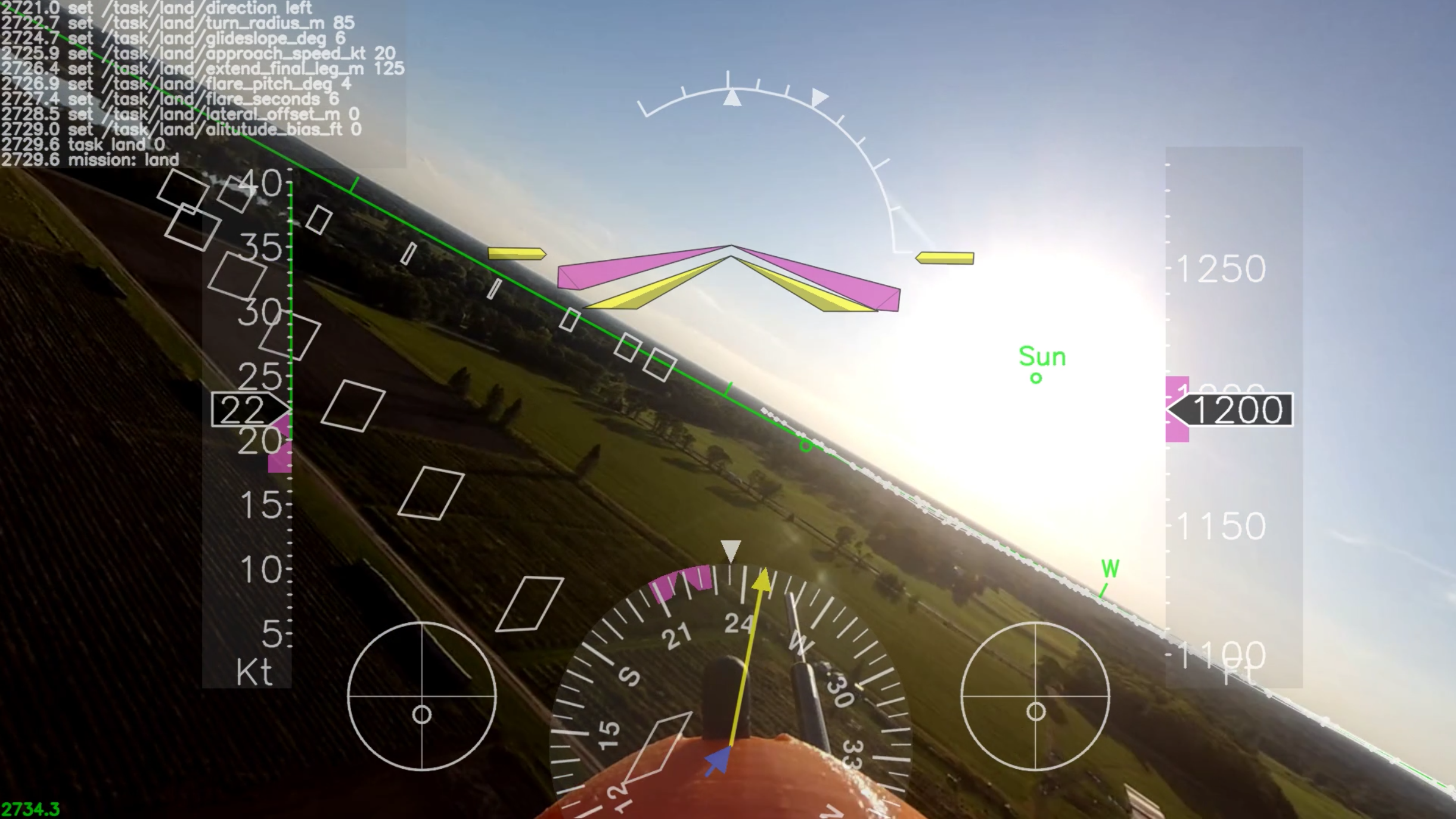
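One way to turn tracked features into a virtual gyro is sketched below. It is an illustration only, and the approach is my assumption rather than necessarily what the open-source tools do. Matched feature locations from two consecutive frames (from any tracker) are first filtered against an airframe mask, since features on the aircraft's own structure move with the camera and would drag the rotation estimate toward zero. The surviving tracks are converted to unit bearing vectors, and the frame-to-frame rotation is solved as Wahba's problem using the SVD (Kabsch) method. Treating the motion as pure rotation assumes the tracked features are far away compared with how far the aircraft travels between frames. All function names are hypothetical, and the camera is assumed to be aligned with the body axes.

```python
import numpy as np


def bearings(pts, K):
    """Convert pixel rows (u, v) to unit bearing vectors in the camera frame."""
    homog = np.column_stack([pts, np.ones(len(pts))])
    rays = homog @ np.linalg.inv(K).T
    return rays / np.linalg.norm(rays, axis=1, keepdims=True)


def off_airframe(pts, airframe_mask):
    """True where a point lies inside the image and not on airframe pixels."""
    h, w = airframe_mask.shape
    u = np.round(pts[:, 0]).astype(int)
    v = np.round(pts[:, 1]).astype(int)
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    keep = np.zeros(len(pts), dtype=bool)
    keep[inside] = ~airframe_mask[v[inside], u[inside]]
    return keep


def rotvec(R):
    """Rotation vector (axis * angle) of a rotation matrix; fine for small angles."""
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    sin_a = np.linalg.norm(w) / 2.0
    cos_a = (np.trace(R) - 1.0) / 2.0
    if sin_a < 1e-12:
        return w / 2.0  # first-order approximation near zero rotation
    return w / (2.0 * sin_a) * np.arctan2(sin_a, cos_a)


def virtual_gyro(pts0, pts1, K, airframe_mask, dt):
    """Body rates (p, q, r) in rad/s from feature tracks between two frames.

    pts0, pts1: (N, 2) matched pixel positions in consecutive frames.
    airframe_mask: (H, W) bool array, True where the airframe fills the image.
    """
    pts0 = np.asarray(pts0, dtype=float)
    pts1 = np.asarray(pts1, dtype=float)
    # Self masking: drop tracks that touch the airframe in either frame.
    keep = off_airframe(pts0, airframe_mask) & off_airframe(pts1, airframe_mask)
    if keep.sum() < 3:
        raise ValueError("not enough tracks off the airframe")
    b0 = bearings(pts0[keep], K)
    b1 = bearings(pts1[keep], K)
    # Kabsch/Wahba: rotation R minimizing sum ||b1 - R b0||^2.
    U, _, Vt = np.linalg.svd(b0.T @ b1)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    # R is how the scene appears to rotate; the camera rotated by its inverse.
    w_cam = rotvec(R.T) / dt
    # Camera axes (x right, y down, z forward) -> body axes (forward, right, down).
    return np.array([w_cam[2], w_cam[0], w_cam[1]])
```

Dividing by the frame interval `dt` turns the per-frame rotation into body rates (p, q, r) that can be compared directly against the IMU gyros.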

Full visualization

Shows conformal and non-conformal HUD elements. Demonstrates the use of augmented reality (trajectory gates and flight path). Visually demonstrates the EKF solution, flight control loops, and autopilot performance. Visually demonstrates the EKF roll/pitch correction toward truth.
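A conformal HUD element is drawn so that it stays registered to a fixed location in the world (a runway, a waypoint, a trajectory gate), which means projecting a world point through the estimated attitude into the camera image. The sketch below assumes a local NED frame, ZYX (yaw, pitch, roll) Euler angles, a pinhole intrinsic matrix `K`, and a camera aligned with the body axes and located at the aircraft reference point (lever arm ignored). The function names are hypothetical, not taken from the author's tools.

```python
import numpy as np


def dcm_body_to_ned(roll, pitch, yaw):
    """ZYX Euler angles (rad) -> direction cosine matrix from body to NED."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    Ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    return Rz @ Ry @ Rx


def project_ned_point(p_ned, aircraft_ned, roll, pitch, yaw, K):
    """Pixel (u, v) of a world point, or None if it is behind the camera."""
    d_ned = np.asarray(p_ned, dtype=float) - np.asarray(aircraft_ned, dtype=float)
    # Rotate the line-of-sight vector from NED into body axes.
    fwd, right, down = dcm_body_to_ned(roll, pitch, yaw).T @ d_ned
    if fwd <= 0.0:
        return None
    # Camera frame: x right, y down, z forward (optical axis).
    uvw = K @ np.array([right, down, fwd])
    return float(uvw[0] / uvw[2]), float(uvw[1] / uvw[2])
```

Projecting, say, the corners of a gate polygon and joining them with lines on the video frame gives a conformal overlay. Because the projection uses the EKF attitude, any roll or pitch error shows up as the overlay sliding against the real horizon and terrain.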

Fully autonomous landing (outside view)

Here is the same fully autonomous landing shown in the nose-cam footage with the HUD, but from a ground-based perspective.