Image Stitching Tutorial Part #2: Direct Georeferencing

What is Direct Georeferencing?

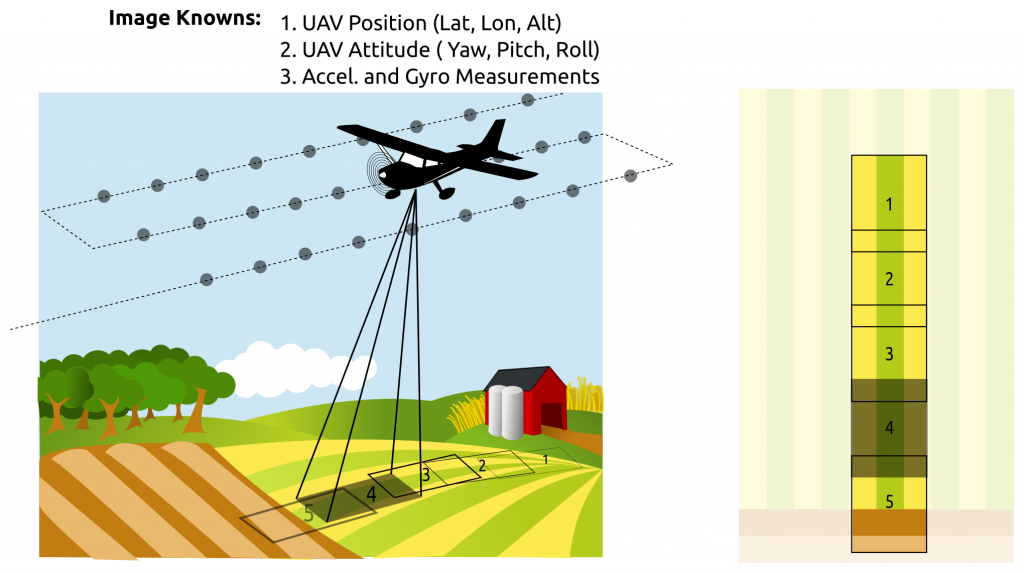

The process of mapping a pixel in an image to a real world latitude, longitude, and altitude is called georeferencing. When UAS flight data is tightly integrated with the camera and imagery data, the aerial imagery can be directly placed and oriented on a map. Any image feature can be directly located. All of this can be done without needing to feature detect, feature match, and image stitch. The promise of “direct georeferencing” is the ability to provide useful maps and actionable data immediately after the conclusion of a flight.

Typically, a collection of overlapping images (possibly tagged with the location of the camera when the image was snapped) is stitched together to form a mosaic. Ground control points can be surveyed and located in the mosaic to georectify the entire image set. However, the process of stitching imagery together can be very time consuming, and specific image sets can fail to stitch well for a variety of reasons.

If a good estimate for the location and orientation of the camera is available for each picture, and if a good estimate of the ground surface is available, the tedious process of image stitching can be skipped and image features can be georeferenced directly.
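
To make the idea concrete, here is a minimal sketch of projecting a single pixel onto the ground. It is only an illustration under several assumptions of mine, not the code behind this tutorial: a local north/east/down (NED) frame in meters rather than latitude and longitude, a flat ground plane, a camera rigidly mounted looking straight down with the top of the image toward the aircraft nose, yaw-pitch-roll Euler angles in radians, and no lens distortion. The function names are hypothetical.

```python
import math

def body_to_ned_matrix(roll, pitch, yaw):
    """Rotation matrix (body -> NED) for yaw-pitch-roll (ZYX) Euler angles in radians."""
    c_r, s_r = math.cos(roll), math.sin(roll)
    c_p, s_p = math.cos(pitch), math.sin(pitch)
    c_y, s_y = math.cos(yaw), math.sin(yaw)
    return [
        [c_y * c_p, c_y * s_p * s_r - s_y * c_r, c_y * s_p * c_r + s_y * s_r],
        [s_y * c_p, s_y * s_p * s_r + c_y * c_r, s_y * s_p * c_r - c_y * s_r],
        [-s_p, c_p * s_r, c_p * c_r],
    ]

def pixel_to_ground(u, v, fx, fy, cx, cy, cam_ned, roll, pitch, yaw, ground_down=0.0):
    """Intersect the ray through pixel (u, v) with a flat ground plane.

    cam_ned is the camera position (north, east, down) in meters, so an
    aircraft 100 m above the origin has down = -100. Returns the ground
    point as (north, east, down), or None if the ray never reaches the ground.
    """
    # Ray in camera coordinates: x right, y down in the image, z along the optical axis.
    xc = (u - cx) / fx
    yc = (v - cy) / fy
    # Assumed mounting: image top toward the nose, optical axis straight down.
    body = (-yc, xc, 1.0)
    rot = body_to_ned_matrix(roll, pitch, yaw)
    ray = [sum(rot[i][j] * body[j] for j in range(3)) for i in range(3)]
    if ray[2] <= 0.0:
        return None  # ray points at or above the horizon
    t = (ground_down - cam_ned[2]) / ray[2]
    if t <= 0.0:
        return None  # camera is at or below the ground plane
    return (cam_ned[0] + t * ray[0], cam_ned[1] + t * ray[1], ground_down)
```

With a real terrain model the single plane intersection would be replaced by a search along the ray, and the resulting local coordinates would still need to be converted to latitude and longitude.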

Where is Direct Georeferencing Suitable for Use?

There are a number of reasons to skip the traditional image stitching pipeline and use direct georeferencing. I list a few here, but if you can think of other use cases, I would love to hear your thoughts and expand this list!

- Any situation where we are willing to trade a seamless, ‘nice’ looking mosaic for fast results. For example, a farmer in a field might want to make a same-day decision rather than wait a day or two for image stitching results to be computed.

- Surveys or counting. One use case I have worked on is marine surveys. In this use case the imagery is collected over open ocean with no stable features to stitch. Instead we were more interested in finding things that were not water and getting a quick but accurate location estimate for them. Farmers might want to count livestock, land managers might want to locate dead trees, and researchers might want to count bird populations on a remote island.

How can Direct Georeferencing Improve the Traditional Image Stitching Pipeline?

There are some existing commercial image stitching applications that are very good at what they do. However, they are closed-source and don’t give up their secrets easily. Thus it is hard to do an apples-to-apples comparison with commercial tools to evaluate how (and how much) direct georeferencing can improve the traditional image stitching pipeline. With that in mind I will forge ahead and suggest several ways I believe direct georeferencing can improve the traditional methods:

- Direct georeferencing provides an initial 3d world coordinate estimate for every detected feature before any matching or stitching work is performed.

- The 3d world coordinates of features can be used to pre-filter match sets between images. When doing an n vs. n image comparison to find matching image pairs, we can compare only images with feature sets that overlap in world coordinates, and then only compare the overlapping subset of features. This speeds up the feature matching process by reducing the number of individual feature comparisons. It also increases the robustness of the feature matching process by reducing the number of potential similar features in the comparison set. Finally, it helps find potential match pairs that other applications might miss.

- After an initial set of potential feature matches is found between a pair of images, these features must be further evaluated and filtered to remove false matches. There are a number of approaches for filtering out incorrect matches, but with direct georeferencing we can add real world proximity to our set of strategies for eliminating bad matches.

- Once the entire match set is computed for the entire image set, the 3d world coordinate for each matched feature can be further refined by averaging the estimate from each matching image together.

- When submitting point and camera data to a bundle adjustment algorithm, we can provide our positions already in 3d world coordinates. We don’t need to build up an initial estimate in some arbitrary camera coordinate system where each image’s features are positioned relative to neighbors. Instead we can start with a good initial guess for the location of all our features.

- When the bundle adjustment algorithm finishes, we can compare the new point location estimates against the original estimates and look for features that have moved implausibly far. This could be evidence of remaining outliers.
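
The pair pre-filtering idea from the list above can be sketched in a few lines. This is an illustration only, assuming each image already has a list of georeferenced feature positions as (east, north) tuples in meters and using simple axis-aligned bounding boxes as the overlap test. The function names and the dictionary layout are my own assumptions for the example.

```python
from itertools import combinations

def bounding_box(points):
    """Axis-aligned bounding box (min_x, min_y, max_x, max_y) of 2d points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))

def boxes_overlap(a, b):
    """True if two bounding boxes share any area (or touch)."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]

def candidate_pairs(features_by_image):
    """Return only the image pairs whose feature footprints overlap.

    features_by_image maps an image name to its list of georeferenced
    feature positions. Images with no features are skipped.
    """
    boxes = {name: bounding_box(pts)
             for name, pts in features_by_image.items() if pts}
    return [(a, b) for a, b in combinations(sorted(boxes), 2)
            if boxes_overlap(boxes[a], boxes[b])]

def features_in_box(points, box):
    """Indices of the features that fall inside a bounding box."""
    return [i for i, (x, y) in enumerate(points)
            if box[0] <= x <= box[2] and box[1] <= y <= box[3]]
```

Only the pairs returned by `candidate_pairs` would be passed on to the feature matcher, and `features_in_box` can trim each image's feature list to the other image's footprint before matching. Because the pose estimates carry some error, padding each box by a margin is probably wise.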

Throughout the image stitching pipeline, it is critical to create a set of image feature matches that link all the images together and cover the overlapping portions of each image pair as fully as possible. False matches can cause ugly imperfections in the final stitched result, and they can cause the bundle adjustment algorithm to fail, so a good set of feature matches is essential. Direct georeferencing can improve this feature matching process.
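
As one hedged example of using real world proximity to reject false matches, the sketch below drops any match whose two features were georeferenced to positions that are too far apart. The data layout (index pairs plus per-image coordinate lists) and the threshold value are assumptions for illustration. A sensible threshold depends on how accurate your pose and terrain estimates are.

```python
import math

def filter_matches_by_distance(matches, coords_a, coords_b, max_dist_m):
    """Keep only matches whose georeferenced positions agree.

    matches:    list of (index_in_a, index_in_b) feature index pairs
    coords_a/b: per-image lists of georeferenced feature positions in
                meters, e.g. (east, north) or (east, north, up) tuples
    max_dist_m: largest plausible disagreement between the two estimates
    """
    kept = []
    for ia, ib in matches:
        if math.dist(coords_a[ia], coords_b[ib]) <= max_dist_m:
            kept.append((ia, ib))
    return kept
```

This check is cheap, so it can run before more expensive geometric filters (a homography or essential matrix fit, for example) rather than instead of them.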

What Information is Required for Direct Georeferencing?

- An accurate camera position and orientation for each image. This may require the flight data log from your autopilot and an accurate (sub-second) time stamp for when the image was snapped.

- An estimate of the ground elevation or terrain model.

- Knowledge of your camera’s lens calibration (the “K” matrix). This encompasses the field of view of your lens (determined by the sensor dimensions and focal length) as well as the sensor width and height in pixels.

- Knowledge of your camera’s lens distortion parameters. Action cameras like a GoPro or Mobius have significant lens distortion that must be accounted for.
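
If you have never built a “K” matrix, the sketch below shows how a first approximation can be derived from the lens and sensor specifications mentioned above. It assumes the principal point sits at the exact center of the image and that there is no skew, which is only roughly true for real cameras. A matrix obtained from a proper calibration procedure should be preferred when available. The function names are hypothetical.

```python
import math

def intrinsic_matrix(focal_mm, sensor_w_mm, sensor_h_mm, width_px, height_px):
    """Approximate 3x3 camera matrix K from nominal lens and sensor specs."""
    fx = focal_mm / sensor_w_mm * width_px   # focal length in horizontal pixels
    fy = focal_mm / sensor_h_mm * height_px  # focal length in vertical pixels
    cx = width_px / 2.0                      # assumed principal point: image center
    cy = height_px / 2.0
    return [
        [fx, 0.0, cx],
        [0.0, fy, cy],
        [0.0, 0.0, 1.0],
    ]

def horizontal_fov_deg(focal_mm, sensor_w_mm):
    """Horizontal field of view implied by the focal length and sensor width."""
    return math.degrees(2.0 * math.atan(sensor_w_mm / (2.0 * focal_mm)))
```

Lens distortion is not part of K. It is described by a separate set of coefficients and must be removed from pixel coordinates before K is used to turn a pixel into a ray.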

Equipment to Avoid

I don’t intend this section to be negative. Every tool has strengths and times when it is a good choice. However, it is important to understand which equipment choices work better and which choices may present challenges.

- Any camera that makes it difficult to snap a picture at a precise time (within < 0.1 seconds of the trigger request). Commercial autofocus cameras can introduce random latency between the camera trigger and the actual photo.

- Rolling shutter cameras. Sadly, this is just about every commercial off-the-shelf camera, but rolling shutter introduces warping into the image which can add uncertainty to the results. This can be partially mitigated by setting your camera to a very high shutter speed (e.g. 1/800th or 1/1000th of a second).

- Cameras with slow shutter speeds or cameras that do not allow you to set your shutter speed or other parameters.

- Any camera mounted to an independent gimbal. A gimbal works nicely for stable video, but if it separates the camera orientation from the aircraft orientation, then we can no longer use aircraft orientation to compute camera orientation.

- Any flight computer that doesn’t let you download a complete flight log that includes real world time stamp, latitude, longitude, altitude, roll, pitch, and yaw.

The important point I am attempting to communicate is that tight integration between the camera and the flight computer is an important aspect of direct georeferencing. Strapping a gimbaled action cam to a commercial quadcopter very likely will not allow you to extract all the information required for direct georeferencing.
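
To show why the flight log and image time stamps matter, here is a small sketch that looks up the aircraft pose at the moment an image was snapped by linearly interpolating between the two nearest log records. The record layout (time, latitude, longitude, altitude, roll, pitch, yaw, with angles in degrees) is an assumption for the example and will differ between autopilots. Yaw is interpolated along the shortest arc so that a turn through north (350 to 10 degrees) does not swing the long way around.

```python
from bisect import bisect_right

def interp_yaw_deg(y0, y1, frac):
    """Interpolate a heading along the shortest arc, result in [0, 360)."""
    diff = (y1 - y0 + 180.0) % 360.0 - 180.0
    return (y0 + frac * diff) % 360.0

def pose_at(log, t):
    """Interpolate the flight log at time t.

    log: records (time, lat, lon, alt, roll, pitch, yaw) sorted by time.
    Returns (lat, lon, alt, roll, pitch, yaw), or None if t lies outside
    the time span covered by the log.
    """
    if not log or t < log[0][0] or t > log[-1][0]:
        return None
    times = [rec[0] for rec in log]
    i = bisect_right(times, t)
    if i == len(log):
        return tuple(log[-1][1:])  # t equals the final time stamp
    r0, r1 = log[i - 1], log[i]
    frac = (t - r0[0]) / (r1[0] - r0[0])
    # Position, roll, and pitch are interpolated linearly.
    lerped = [a + frac * (b - a) for a, b in zip(r0[1:6], r1[1:6])]
    return tuple(lerped) + (interp_yaw_deg(r0[6], r1[6], frac),)
```

Any unknown latency between the trigger request and the actual exposure shifts `t`, and at typical flight speeds even a fraction of a second translates into meters of position error on the ground.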