Autopilot Visualization: Flight Track

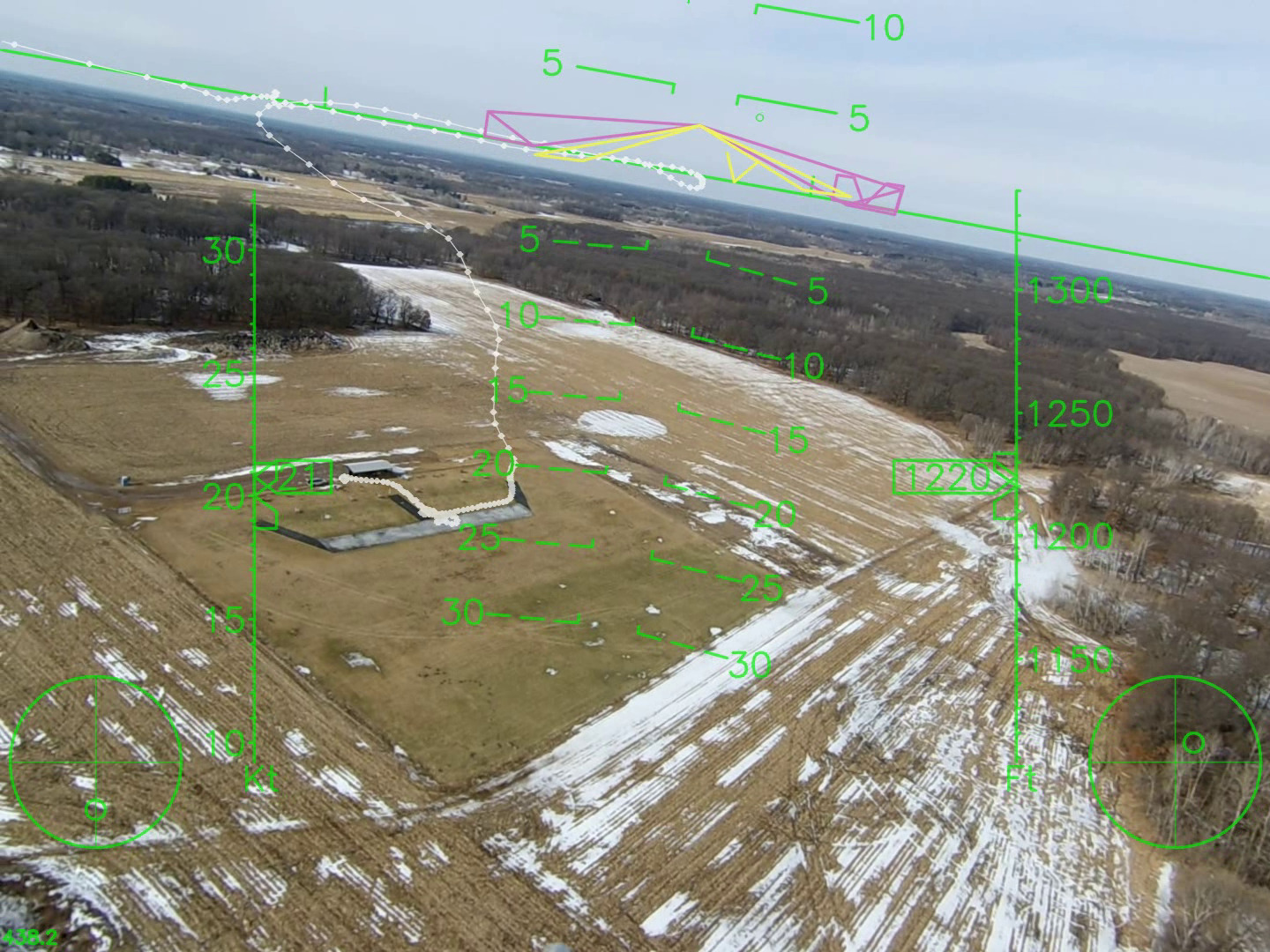

Circling back around to see our pre-flight, launch, and climb-out trajectory.

Augmented reality

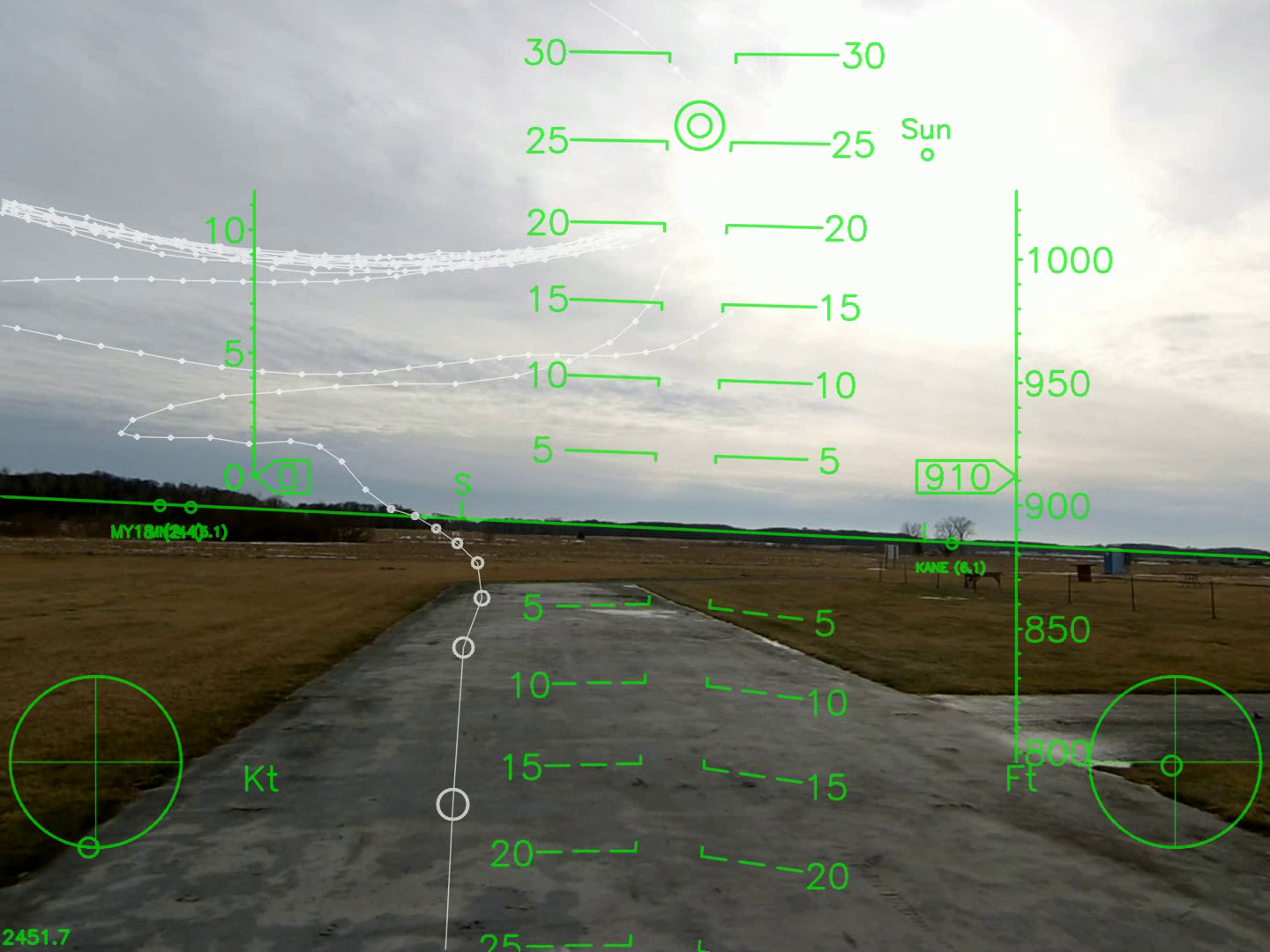

Everything in this post shows real imagery taken by a real camera on a real UAV that is really in flight. Hopefully that is obvious, but I just want to point out that I’m not cheating. However, with a bit of math, a bit of camera calibration work, and a fairly accurate EKF, we can start drawing the locations of things on top of the real camera view. These artificial objects appear to stay attached to the real world as we fly around and through them. The process isn’t perfected, but it is fun to share what I’ve been able to do so far.

Landing approach to touchdown with circle hold tracks in the background. Notice the sun location is correctly computed for location and time of day.

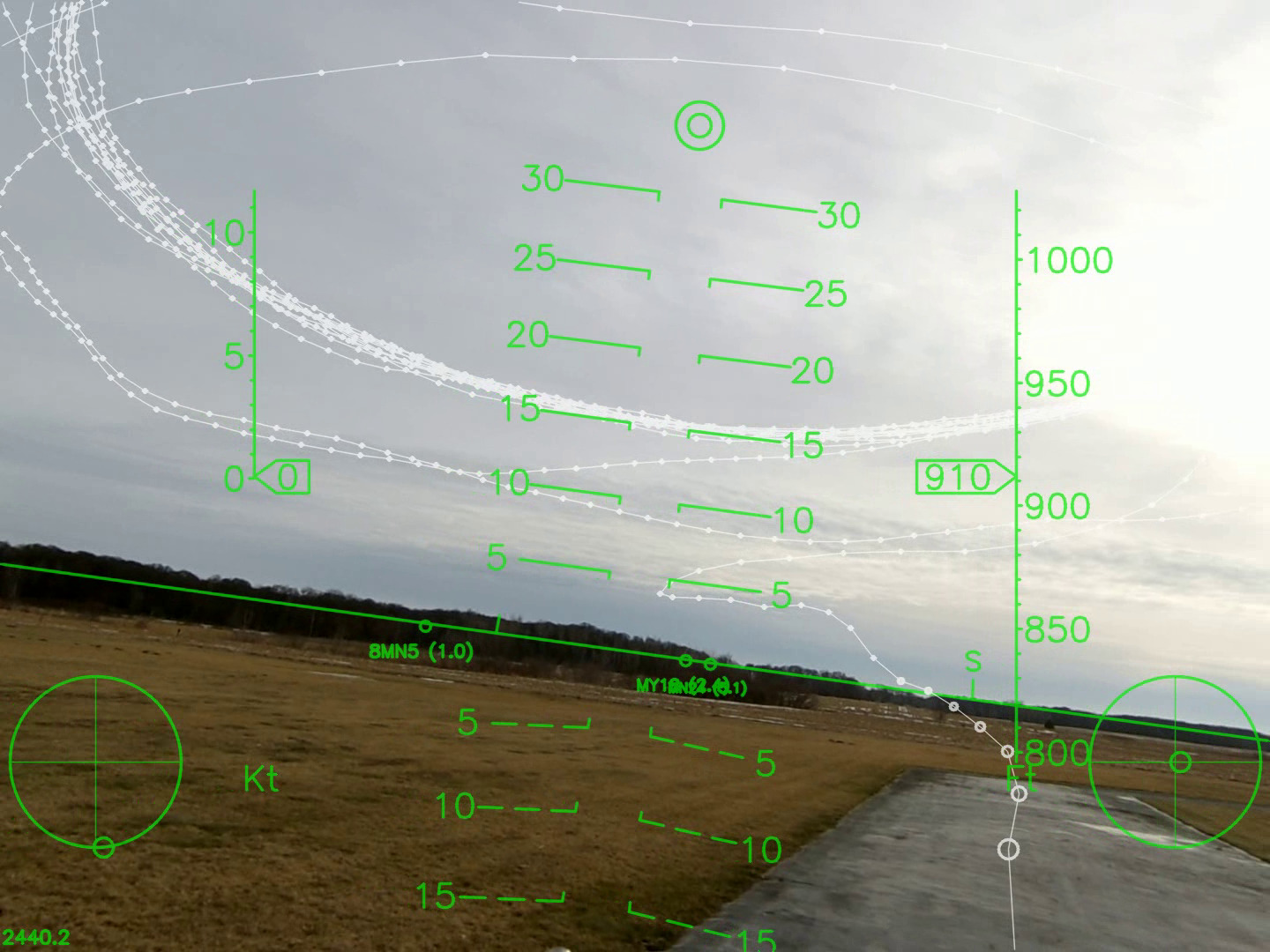

A similar view that better shows the circle hold that was flown along with the landing approach.
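To give a feel for the "bit of math" behind drawing a flight track on top of the video, here is a minimal sketch of projecting one logged track point into the image. It is an illustration under stated assumptions, not the actual post-processing code: positions are in a local north/east/down (NED) frame in meters, attitude comes from the EKF as roll/pitch/yaw in radians, the camera is assumed to look straight along the aircraft nose with no mounting offset, and lens distortion is ignored. The function names and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical placeholders; real values would come from camera calibration.

```python
import math


def ned_to_body_matrix(roll, pitch, yaw):
    """Rotation matrix taking NED vectors into the body frame
    (x forward, y right, z down), standard yaw-pitch-roll order."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cyaw, syaw = math.cos(yaw), math.sin(yaw)
    return [
        [cp * cyaw, cp * syaw, -sp],
        [sr * sp * cyaw - cr * syaw, sr * sp * syaw + cr * cyaw, sr * cp],
        [cr * sp * cyaw + sr * syaw, cr * sp * syaw - sr * cyaw, cr * cp],
    ]


def project_ned_point(point_ned, aircraft_ned, roll, pitch, yaw, fx, fy, cx, cy):
    """Return the (u, v) pixel of a world point, or None if it is behind the camera.

    Assumes an ideal pinhole camera aligned with the body axes:
    image right = body y, image down = body z, optical axis = body x.
    """
    rel = [p - a for p, a in zip(point_ned, aircraft_ned)]
    rot = ned_to_body_matrix(roll, pitch, yaw)
    bx, by, bz = (sum(rot[i][j] * rel[j] for j in range(3)) for i in range(3))
    if bx <= 0.0:
        return None  # point is behind the image plane, nothing to draw
    u = cx + fx * by / bx
    v = cy + fy * bz / bx
    return (u, v)


if __name__ == "__main__":
    # Made-up intrinsics for a 1920x1440 frame (illustrative only).
    cam = dict(fx=1000.0, fy=1000.0, cx=960.0, cy=720.0)
    # Aircraft level at the origin heading north; track point ahead and to the right.
    print(project_ned_point((100.0, 10.0, 5.0), (0.0, 0.0, 0.0), 0.0, 0.0, 0.0, **cam))
```

Running every logged position through a function like this for each video frame, using that frame's EKF position and attitude, and connecting the resulting pixels is enough to make a track appear fixed in the world. Any error in attitude, calibration, or timing shows up directly as the drawn track sliding against the real scene.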

For the impatient

In this post I share two long videos. These are complete flights from start to finish. In them you can see the entire previous flight track whenever it comes into view. I don’t know how best to explain this, but watch the videos and feel free to jump ahead into the middle of a flight. Hopefully you can see intuitively exactly what is going on.

Before you get totally bored, make sure to jump to the end of each video. After landing I pick up the aircraft and point the camera to the sky where I have been flying. There you can see my circles and landing approach drawn into the sky.

I think it’s pretty cool and it’s a pretty useful tool for seeing the accuracy and repeatability of a flight controller. I definitely have some gain tuning updates ready for my next time out flying based on what I observed in these videos.

The two videos

Additional notes and comments

- The autopilot flight controller shown here is built from a BeagleBone Black + MPU-6000 + u-blox 8 + ATmega2560.

- The autopilot is running the AuraUAS software (not one of the more popular and well-known open-source autopilots).

- The actual camera flown is a Runcam HD 2 in 1920×1440 @ 30 fps mode.

- The UAV is a Hobby King Skywalker.

- The software to post-process the videos is all written in Python + OpenCV and licensed under the MIT license. All you need is a video and a flight log, and you can make these videos from your own flights.

- Aligning the flight data with the video is fully automatic (described in earlier posts here). To summarize, I can compute the frame-to-frame motion in the video and automatically correlate that with the flight data log to find the exact alignment between the video and the flight data log.

- The video/data correlation process can also be used to geotag video frames … automatically … I don’t know … maybe to send them to some image stitching software.
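As a rough illustration of the video/data alignment idea in the notes above, here is a minimal sketch that finds the time shift between two signals by brute-force cross-correlation. It assumes (my assumption, not necessarily how the real tool works) that the video motion and the flight log have each been reduced to a single rate signal, for example a rotation rate, and resampled to the same fixed sample interval. The function names are hypothetical, and plain Python lists are used to keep the sketch dependency-free.

```python
import random


def best_lag(video_sig, log_sig, max_lag):
    """Return the integer lag k such that video_sig[i] best matches log_sig[i + k].

    The score for each lag is the mean product of the mean-removed signals
    over the samples where the two overlap.
    """
    def centered(x):
        mean = sum(x) / len(x)
        return [value - mean for value in x]

    a, b = centered(video_sig), centered(log_sig)
    best_k, best_score = 0, float("-inf")
    for k in range(-max_lag, max_lag + 1):
        pairs = [(a[i], b[i + k]) for i in range(len(a)) if 0 <= i + k < len(b)]
        if len(pairs) < 2:
            continue  # not enough overlap to score this lag
        score = sum(x * y for x, y in pairs) / len(pairs)
        if score > best_score:
            best_k, best_score = k, score
    return best_k


def estimate_time_offset(video_sig, log_sig, sample_dt, max_lag):
    """Seconds to add to a video timestamp to get the matching log timestamp."""
    return best_lag(video_sig, log_sig, max_lag) * sample_dt


if __name__ == "__main__":
    # Synthetic stand-in for a logged rate signal sampled at 50 Hz.
    rng = random.Random(0)
    log_rate = [rng.uniform(-1.0, 1.0) for _ in range(200)]
    # Pretend the video started 7 samples after the log did.
    video_rate = log_rate[7:157]
    print(estimate_time_offset(video_rate, log_rate, 0.02, 20))
```

Once the offset is known, each video frame time maps to a log time, so looking up (or interpolating) position and attitude at that time is what would make per-frame geotagging possible.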

If you have any questions or comments, I’d love to hear from you!