Automated Movie Frame Extracting and Geotagging

This is a short tutorial on an automated method to extract and geotag movie frames. One specific use case: you have just flown a survey with your quadcopter, carrying a GoPro action cam in movie mode on a 2-axis gimbal pointing straight down. Now you’d like to create a stitched map from your data using tools like Pix4D or Agisoft.

The most interesting part of this article is the method I have developed to correlate the frame timing of a movie with the aircraft’s flight data log. This correlation process yields a result such that for any and every frame of the movie, I can find the exact corresponding time in the flight log, and for any time in the flight log, I can find the corresponding video frame. Once this relationship is established, it is a simple matter to walk through the flight log and pull frames based on the desired conditions (for example, grab a frame at some time interval, but only while above some altitude AGL and only when oriented within +/- 10 degrees of north or south).

Video Analysis

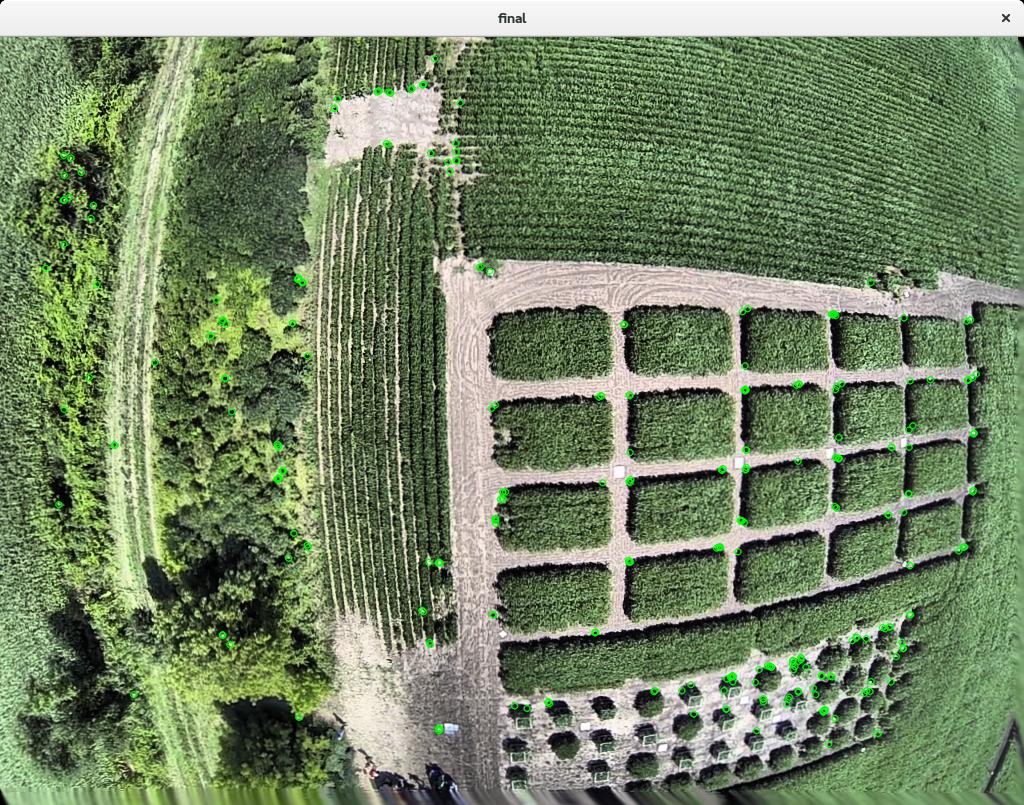

The first step of the process is to analyze the frame to frame motion in the video.

Example of feature detection in a single frame.

- For each video frame, we run a feature detection algorithm (such as SIFT, SURF, ORB, etc.) and then compute the descriptors for each detected feature.

- Find all the feature matches between frame “n-1” and frame “n”. This is done using standard FLANN matching, followed by a RANSAC-based homography matrix solver, and then discarding outliers. This approach has the natural advantage of ignoring extraneous features from the prop or the nose of the aircraft, because those features don’t fit the overall consensus of the homography matrix.

- Given the set of matching features between frame “n-1” and frame “n”, I then compute a best-fit rigid affine transformation that maps the feature locations (u, v) from one frame to the next. The affine transformation can be decomposed into a rotation component, a translation (x, y) component, and a scale component.

- Finally, I log the frame number, frame time (starting at t=0.0 for the first frame), and the rotation (deg), x translation (pixels), and y translation (pixels).
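The per-frame motion step can be sketched in plain NumPy. This is a minimal sketch, not the code from the ImageAnalysis repository: it assumes the matched feature locations are already available as two N×2 arrays, and it swaps the homography-based outlier rejection described above for a simple RANSAC loop on the same 4-parameter model (rotation, uniform scale, x/y translation) that is then decomposed. The function names are hypothetical; in OpenCV, estimateAffinePartial2D() fits the same kind of model with RANSAC built in.

```python
import numpy as np

def fit_similarity(src, dst):
    """Least-squares fit of u' = a*u - b*v + tx, v' = b*u + a*v + ty.

    src, dst: (N, 2) arrays of matched (u, v) feature locations, N >= 2.
    Returns the 2x3 matrix [[a, -b, tx], [b, a, ty]].
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    A = np.zeros((2 * n, 4))
    # even rows: u' equations, odd rows: v' equations; unknowns (a, b, tx, ty)
    A[0::2] = np.column_stack([src[:, 0], -src[:, 1], np.ones(n), np.zeros(n)])
    A[1::2] = np.column_stack([src[:, 1], src[:, 0], np.zeros(n), np.ones(n)])
    (a, b, tx, ty), *_ = np.linalg.lstsq(A, dst.reshape(-1), rcond=None)
    return np.array([[a, -b, tx], [b, a, ty]])

def ransac_similarity(src, dst, thresh_px=2.0, iters=200, seed=0):
    """Fit the model to random 2-point samples, keep the largest inlier set,
    then refit on all inliers. Returns (matrix, inlier_mask)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(src), size=2, replace=False)
        M = fit_similarity(src[idx], dst[idx])
        pred = src @ M[:, :2].T + M[:, 2]
        inliers = np.linalg.norm(pred - dst, axis=1) < thresh_px
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 2:
        raise ValueError("not enough inliers to fit a transform")
    return fit_similarity(src[best], dst[best]), best

def decompose(M):
    """Split the 2x3 matrix into rotation (deg), x/y translation (px) and scale."""
    a, b = M[0, 0], M[1, 0]
    return np.degrees(np.arctan2(b, a)), M[0, 2], M[1, 2], np.hypot(a, b)
```

Each frame pair then contributes one row (frame number, time, rotation, dx, dy) to the movie log; features on the prop or nose fall outside the inlier set because they do not move with the rest of the scene.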

The cool, tricky observation

I haven’t seen anyone else do anything like this before, so I’ll pretend I’ve come up with something new and cool. I know there is never anything new under the sun, but it’s fun to rediscover things for oneself.

Use case #1: Iris quadcopter with a two-axis Tarot gimbal, and a GoPro Hero 3 pointing straight down. Because the gimbal is only 2-axis, the camera tracks the yaw motion of the quadcopter exactly. The camera is looking straight down, so the camera roll rate should exactly match the quadcopter’s yaw rate. I have shown that I can compute the frame-to-frame roll rate using computer vision techniques, and we can save the Iris flight log. If these two signal channels aren’t too noisy or biased relative to each other, perhaps we can find a way to correlate them and figure out the time offset.

3DR Iris + Tarot 2-axis gimbal

Use case #2: A Senior Telemaster fixed-wing aircraft with a Mobius action cam fixed to the airframe, looking straight forward. In this example, camera roll should exactly correlate to aircraft roll. Camera x translation should map to aircraft yaw, and camera y translation should map to aircraft pitch.

Senior Telemaster with forward looking camera.

Senior Telemaster forward view.

In all cases, this method requires that at least one of the camera axes is fixed relative to at least one of the aircraft axes. If you are running a 3-axis gimbal, you are out of luck … but perhaps, with this method in mind and a bit of ingenuity, alternative methods could be devised to find matching points in the video and the flight log.
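Before correlating, the video channels need to be in the same units as the flight log. The rotation channel becomes a rate simply by multiplying by the frame rate. For the forward-looking case, one way to convert the x and y pixel translations into angular rates, assuming a pinhole camera with a known focal length in pixels (focal_px is an assumed calibration value, and GoPro-style lens distortion is ignored) and scenery far enough away that parallax from the aircraft’s own translation is small, is to turn each pixel shift into an angle with atan(d / f). The function name is hypothetical, and the sign of each channel depends on the camera mounting and the flight log’s axis conventions, so flip signs as needed after comparing plots.

```python
import numpy as np

def movie_rates(rot_deg, dx_px, dy_px, fps, focal_px):
    """Convert per-frame motion from the movie log into rates in deg/s.

    rot_deg: frame-to-frame image rotation (deg)
    dx_px, dy_px: frame-to-frame image translation (pixels)
    fps: movie frame rate; focal_px: focal length in pixels (assumed known)
    """
    rot = np.asarray(rot_deg, dtype=float)
    dx = np.asarray(dx_px, dtype=float)
    dy = np.asarray(dy_px, dtype=float)
    # compare with yaw rate (down-looking, 2-axis gimbal) or roll rate (forward-looking)
    roll_rate = rot * fps
    # pinhole model: a shift of d pixels corresponds to an angle of atan(d / f)
    x_rate = np.degrees(np.arctan2(dx, focal_px)) * fps  # ~ yaw rate, forward-looking
    y_rate = np.degrees(np.arctan2(dy, focal_px)) * fps  # ~ pitch rate, forward-looking
    return roll_rate, x_rate, y_rate
```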

Flight data correlation

This is the easy part. After processing the movie file, we now have a log of the frame to frame motion. We also have the flight log from the aircraft. Here are the steps to correlate the two data logs.

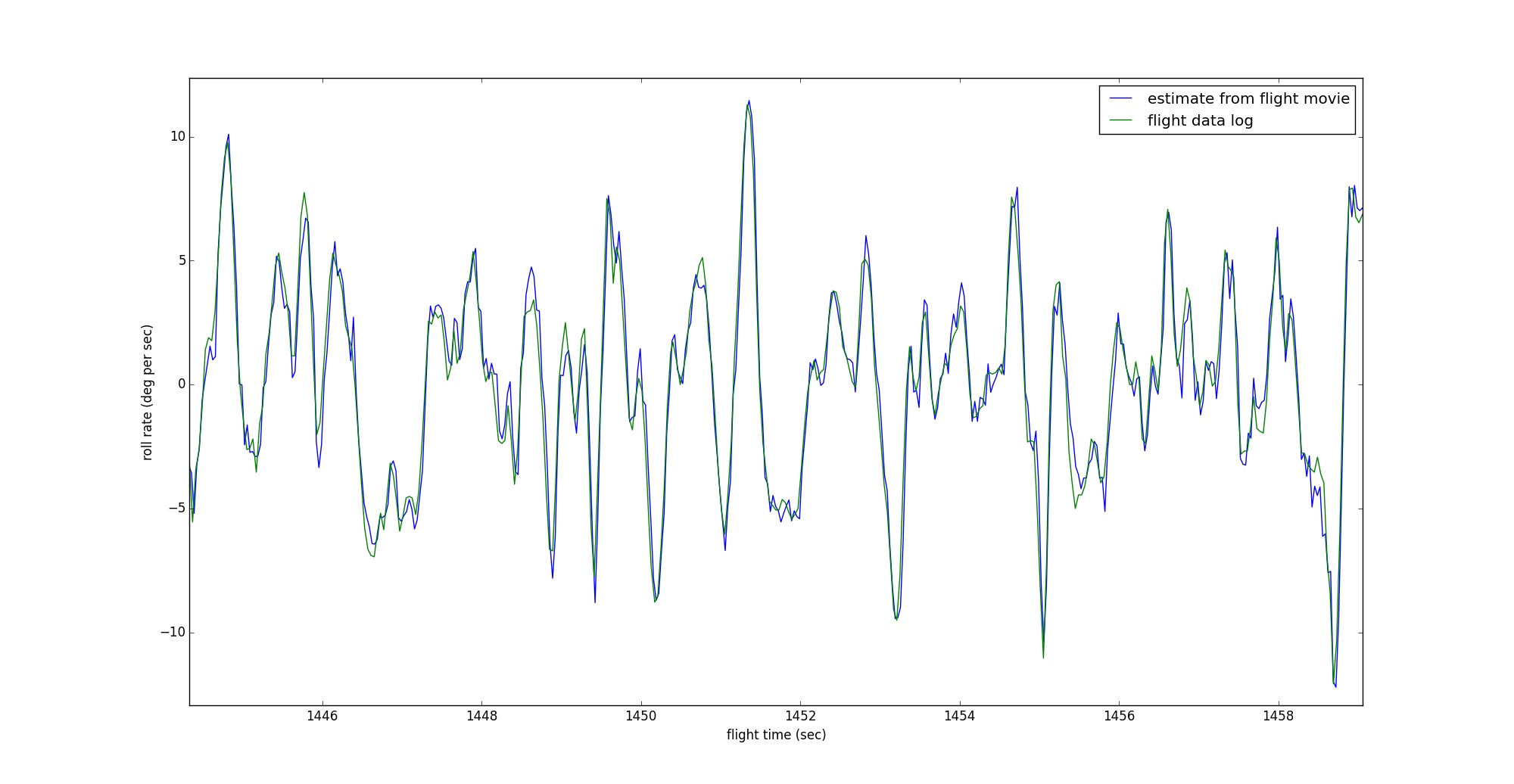

Correlated sensor data streams.

- Load both data logs (movie log and flight log) into a big array and resample the data at a consistent interval. I have found that resampling at 30 Hz seems to work well enough. I have experimented with fitting a spline curve through lower-rate data to smooth it out. It makes the plots look prettier, but I’m sure it does not improve the accuracy of the correlation.

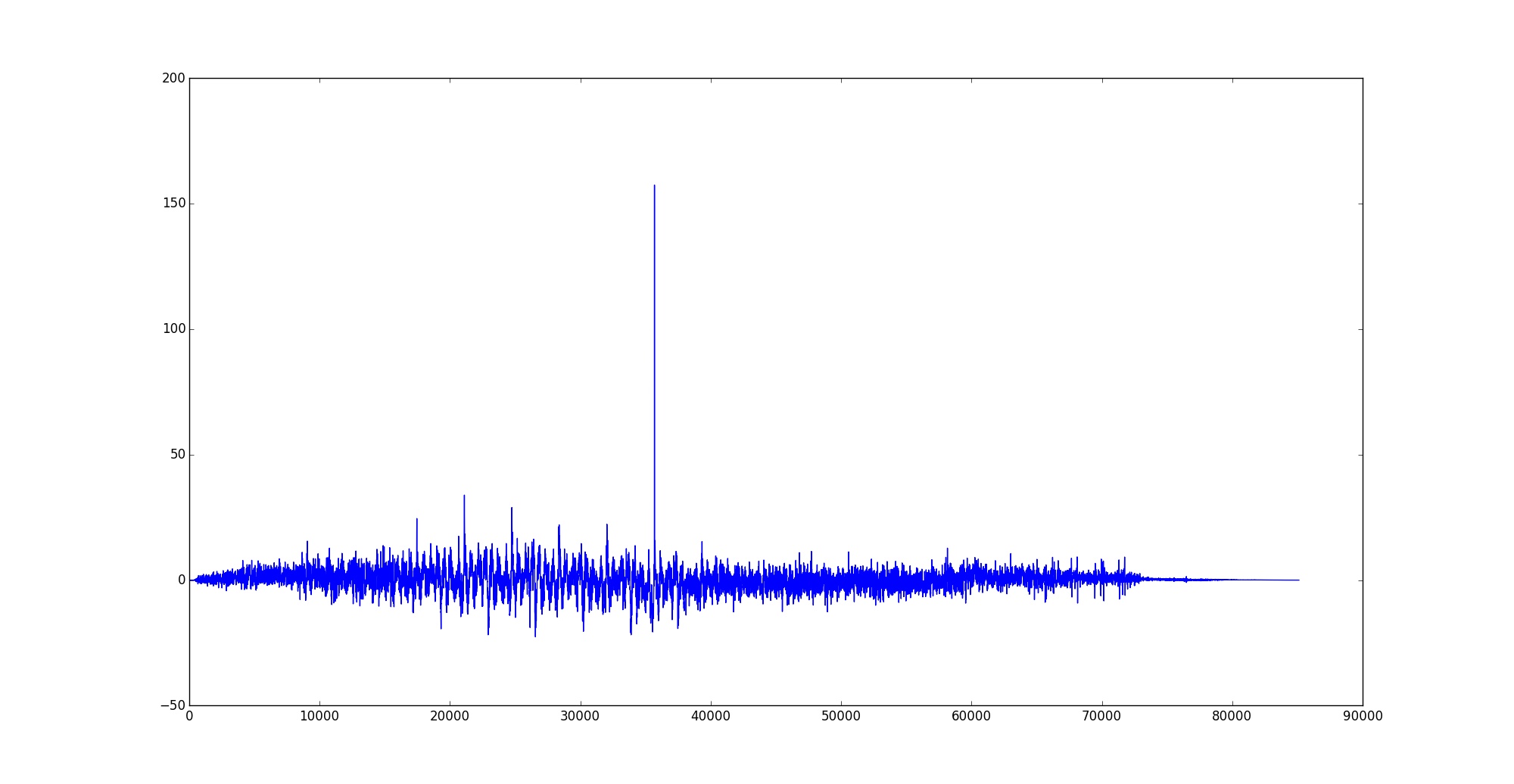

- I coded this process up in Python. Luckily, Python (NumPy) has a function that takes two time sequences as input and does brute-force correlation. It slides one data stream against the other and computes a correlation value for every possible overlap. This is why it is important to resample both data streams at the same fixed sample rate. <pre>ycorr = np.correlate(movie_interp[:,1], flight_interp[:,1], mode='full')</pre>

- When you plot “ycorr”, you will hopefully see a spike, and the position of that spike corresponds to the best fit (the time offset) of the two data streams.
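Put together, the resampling and correlation steps might look like the sketch below. It assumes each log has already been reduced to a time array (s) and one matching signal (for example, the video roll rate and the gyro yaw rate), uses np.interp for linear resampling rather than a spline, and removes the mean of each stream before calling np.correlate so a constant offset does not dominate. The function names are hypothetical. The part that is easy to get wrong is turning the index of the spike into a time: in 'full' mode, output index i corresponds to a lag of i - (len(flight) - 1) samples.

```python
import numpy as np

def resample(t, y, hz=30.0):
    """Linearly interpolate samples y(t) onto a uniform grid starting at t[0]."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    tu = np.arange(t[0], t[-1], 1.0 / hz)
    return tu, np.interp(tu, t, y)

def find_time_offset(movie_t, movie_y, flight_t, flight_y, hz=30.0):
    """Return offset (s) such that flight_time ~= movie_time + offset."""
    mt, my = resample(movie_t, movie_y, hz)
    ft, fy = resample(flight_t, flight_y, hz)
    # remove the means so a constant bias does not dominate the correlation
    my = my - my.mean()
    fy = fy - fy.mean()
    ycorr = np.correlate(my, fy, mode='full')
    # index i of the 'full' output is lag k = i - (len(fy) - 1):
    # movie sample n + k lines up with flight sample n
    lag = int(np.argmax(ycorr)) - (len(fy) - 1)
    # movie time mt[0] + (n + lag)/hz  <->  flight time ft[0] + n/hz
    return ft[0] - mt[0] - lag / hz
```

The raw correlation is not normalized by overlap length, so if the spike is weak it can help to trim the flight log to the portion when the camera was recording.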

Plot of data overlay position vs. correlation.

Geotagging movie frames

Raw GoPro frame grab showing significant lens distortion. Latitude = 44.69231071, Longitude = -93.06131655, Altitude = 322.1578

The important result of the correlation step is that we have now determined the exact offset in seconds between the movie log and the flight log. We can use this to easily map a point in one data file to a point in the other data file.
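Once the offset is known, the mapping in each direction is one line. This sketch assumes a constant frame rate with frame 0 at movie time 0.0, and an offset defined so that flight_time = movie_time + offset; the function names are hypothetical. Many cameras offer a 29.97 fps (30000/1001) mode rather than exactly 30 fps, so the frame rate is better read from the movie file (for example with OpenCV’s CAP_PROP_FPS property) than hard-coded.

```python
def frame_to_flight_time(frame, fps, offset):
    """Flight-log time (s) of a movie frame, assuming frame 0 is at movie time 0.0."""
    return frame / fps + offset

def flight_time_to_frame(t, fps, offset):
    """Nearest movie frame to flight-log time t (the caller checks it is in range)."""
    return int(round((t - offset) * fps))
```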

Movie encoding formats are sequential: the compression algorithms need the previous frames to decode the next one. Thus the geotagging script steps through the movie file frame by frame and finds the matching point in the flight log data file.

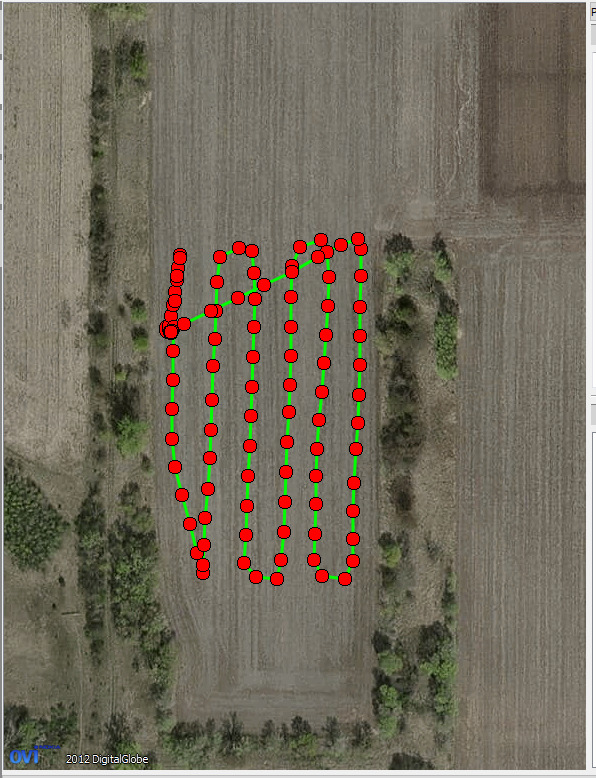

For each frame that matches the extraction conditions, it is a simple matter to look up the corresponding longitude, latitude, and altitude from the flight log. My script provides an example of selecting movie frames based on conditions in the flight log. I know that the flight was planned so the transects were flown North/South and the target altitude was about 40 m AGL. I specifically coded the script to extract movie frames at a specified interval in seconds, but only to consider frames taken when the quadcopter was above 35 m AGL and oriented within +/- 10 degrees of either North or South. The script is written in Python, so it could easily be adjusted for other constraints.
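The selection rules above can be expressed as a filter over the frame times. This is a hedged sketch, not the author’s script: it assumes the flight log has been loaded into NumPy arrays of time (s), altitude AGL (m) and compass heading (deg), and that offset satisfies flight_time = movie_time + offset. Altitude is linearly interpolated; heading is taken from the nearest log sample because naive interpolation breaks across the 0/360 degree wrap. The function name and the 2 s default interval are assumptions for illustration; the 35 m and +/- 10 degree defaults come from the text.

```python
import numpy as np

def select_frames(frame_times, offset, log_t, alt_agl, yaw_deg,
                  interval=2.0, min_agl=35.0, tol_deg=10.0):
    """Return indices of frames to extract.

    frame_times: movie time (s) of each frame, in decode order.
    offset: flight_time = movie_time + offset (from the correlation step).
    log_t, alt_agl, yaw_deg: flight-log time (s), altitude AGL (m), heading (deg).
    """
    log_t = np.asarray(log_t, dtype=float)
    alt_agl = np.asarray(alt_agl, dtype=float)
    yaw_deg = np.asarray(yaw_deg, dtype=float)
    selected = []
    last_t = -np.inf
    for i, mt in enumerate(frame_times):
        ft = mt + offset
        if ft < log_t[0] or ft > log_t[-1]:
            continue  # frame falls outside the flight log
        agl = np.interp(ft, log_t, alt_agl)
        yaw = yaw_deg[np.argmin(np.abs(log_t - ft))]  # nearest sample, no wrap issues
        d = np.mod(yaw, 180.0)  # 0 means pointing north or south
        heading_ok = d <= tol_deg or d >= 180.0 - tol_deg
        if agl > min_agl and heading_ok and ft - last_t >= interval:
            selected.append(i)
            last_t = ft
    return selected
```

The selected indices can then be pulled out during the single sequential pass through the movie.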

The script writes each selected frame to disk using the opencv imwrite() function, and then uses the python “pyexiv2” module to write the geotag information into the exif header for that image.
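Most of the geotagging work is converting decimal degrees into the form EXIF expects: latitude and longitude as three rationals (degrees, minutes, seconds) plus N/S and E/W reference letters, and altitude as a rational plus an above/below sea level flag. The sketch below builds those values with Python’s fractions module and keys them by exiv2 tag names. It deliberately stops short of calling pyexiv2, because that module’s API and accepted value types differ between versions, so the final write call is left as an assumption to check against the installed version. The function names are hypothetical.

```python
from fractions import Fraction

def to_dms_rationals(value, ref_pos, ref_neg, sec_den=10000):
    """Decimal degrees -> (ref, [deg, min, sec]) as exact fractions for EXIF."""
    ref = ref_pos if value >= 0 else ref_neg
    # work in integer units of 1/sec_den seconds so rounding never yields 60 s
    total = round(abs(value) * 3600 * sec_den)
    deg, rem = divmod(total, 3600 * sec_den)
    minutes, sec = divmod(rem, 60 * sec_den)
    return ref, [Fraction(deg), Fraction(minutes), Fraction(sec, sec_den)]

def gps_exif_tags(lat, lon, alt_m):
    """Build exiv2-style GPS tag values for one frame (values only)."""
    lat_ref, lat_dms = to_dms_rationals(lat, "N", "S")
    lon_ref, lon_dms = to_dms_rationals(lon, "E", "W")
    return {
        "Exif.GPSInfo.GPSLatitudeRef": lat_ref,
        "Exif.GPSInfo.GPSLatitude": lat_dms,
        "Exif.GPSInfo.GPSLongitudeRef": lon_ref,
        "Exif.GPSInfo.GPSLongitude": lon_dms,
        # GPSAltitudeRef: 0 = above sea level, 1 = below sea level
        "Exif.GPSInfo.GPSAltitudeRef": 0 if alt_m >= 0 else 1,
        "Exif.GPSInfo.GPSAltitude": Fraction(round(abs(alt_m) * 100), 100),
    }
```

For the frame in the caption above, gps_exif_tags(44.69231071, -93.06131655, 322.1578) gives latitude N 44° 41' 32.3186" and longitude W 93° 3' 40.7396".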

A screen grab from Pix4D showing the physical location of all the captured GoPro movie frames.

Applications?

Aerial surveying and mapping

The initial use case for this code was to automate the process of extracting frames from a GoPro movie and geotagging them in preparation for handing the image set over to Pix4D for stitching and mapping.

Final stitch result from 120 geotagged GoPro movie frames.

Using video as a truth reference to analyze sensor quality

It is interesting to see how accurately the video roll rate corresponds to the IMU gyro roll rate (now assuming a forward-looking camera). It is also interesting to see in the plots how the two data streams track exactly for some periods of time, but diverge by a slowly varying bias for other periods. I believe this shows the variable bias of MEMS gyro sensors. It would be interesting to run down this path a little further and see whether the bias correlates with g-force in a coupled axis.
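One way to pull that slowly varying bias out of the plots, assuming both rates are in the same units, resampled to the same time base, and already aligned using the correlation offset, is a centred moving average of their difference. This is a hedged sketch with a hypothetical function name: the window length (301 samples, about 10 s at 30 Hz) is an arbitrary choice, and with mode='same' the first and last half-window are pulled toward zero by the implicit zero padding, so those samples should be ignored.

```python
import numpy as np

def running_bias(gyro_rate, video_rate, window=301):
    """Estimate a slowly varying gyro bias as a centred moving average of
    (gyro - video); both inputs are time-aligned and equally sampled."""
    diff = np.asarray(gyro_rate, dtype=float) - np.asarray(video_rate, dtype=float)
    kernel = np.ones(window) / window
    return np.convolve(diff, kernel, mode="same")
```

The resulting bias trace could then be plotted against the logged accelerations to test the g-force idea.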

Visual odometry and real time mapping

Given feature detection and matching from one frame to the next, knowledge of the camera pose at each frame, the OpenCV solvePnP() and triangulatePoints() functions, and a working bundle adjuster … what could be done to map the surface or compute visual odometry during a GPS outage?
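For the triangulation piece, the standard linear (DLT) formulation is short enough to sketch in NumPy. This assumes two 3x4 projection matrices P = K[R | t] built from the known camera poses and one matched pixel in each view. OpenCV’s triangulatePoints() handles many points at once; this hypothetical helper only illustrates the underlying linear algebra and is not code from the ImageAnalysis repository.

```python
import numpy as np

def triangulate_dlt(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two views.

    P1, P2: 3x4 camera projection matrices; x1, x2: pixel coordinates (u, v).
    Each view contributes two rows of the form u*P[2] - P[0] and v*P[2] - P[1].
    """
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # the homogeneous point is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```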

Source Code

The source code for all my image analysis experimentation can be found at the University of Minnesota UAV Lab GitHub page. It is distributed under the MIT open-source license:

https://github.com/UASLab/ImageAnalysis

Comments or questions?

I’d love to see your comments or questions in the comments section at the end of this page!