Adventures in Aerial Image Stitching

A small UAV + a camera = aerial pictures.

This is pretty cool just by itself. The above images are downsampled, but at full resolution you can pick out some pretty nice details. (Click on the following image to see the full/raw pixel resolution of the area.)

The next logical step of course is to stitch all these individual images together into a larger map. The questions are: What software is available to do image stitching? How well does it work? Are there free options? Do I need to explore developing my own software tool set?

Expectations

Various aerial imaging sites have set the bar at near visual perfection. When we look at Google Maps (for example), the edges of runways and roads are exactly straight, and it is almost impossible to find any visible seam or anomaly in their data set. However, it is well known that Google imagery can be several meters off from its true position, especially away from well-travelled areas. Also, their imagery can be a bit dated and is lower resolution than we can achieve with our own cameras. These are the reasons we might want to fly a camera and get more detailed, more current, and perhaps more accurately placed imagery.

Goals

Of course the first goal is to meet our expectations. 🙂 I am very averse to grueling manual stitching processes, so the second goal is to develop a highly automated process with minimal manual intervention needed. A third goal is to be able to present the data in a way that is useful and manageable to the end user.

Attempt #1: Hugin

Hugin is a free/open-source image stitching tool. It appears to be well developed, very capable, and supports a wide variety of stitching and projection modes. At its core it uses SIFT to identify features and create a set of keypoints. It then builds a KD tree and uses a fast nearest-neighbor search to find matching features between image pairs. This is pretty state-of-the-art stuff as far as my investigation into this field has shown.
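To make the KD tree idea concrete, here is a minimal pure-Python sketch of nearest-neighbor matching between two sets of descriptors. This is not Hugin's code. The function names and the tiny 2-D "descriptors" are made up for illustration. Real SIFT descriptors have 128 dimensions, and at that size libraries usually switch to approximate variants of this search.

```python
import math

def build_kdtree(points, depth=0):
    """Recursively build a KD tree from (index, vector) pairs."""
    if not points:
        return None
    axis = depth % len(points[0][1])          # cycle through the dimensions
    points = sorted(points, key=lambda p: p[1][axis])
    mid = len(points) // 2                    # median becomes the split node
    return {
        "point": points[mid],
        "axis": axis,
        "left": build_kdtree(points[:mid], depth + 1),
        "right": build_kdtree(points[mid + 1:], depth + 1),
    }

def nearest(node, query, best=None):
    """Return (distance, index) of the stored vector closest to query."""
    if node is None:
        return best
    idx, vec = node["point"]
    d = math.dist(vec, query)
    if best is None or d < best[0]:
        best = (d, idx)
    diff = query[node["axis"]] - vec[node["axis"]]
    near, far = (node["left"], node["right"]) if diff < 0 else (node["right"], node["left"])
    best = nearest(near, query, best)
    # Only search the far side if the splitting plane is closer than the best hit.
    if abs(diff) < best[0]:
        best = nearest(far, query, best)
    return best

# Toy descriptors from two images (hypothetical values).
descriptors_a = [(0.1, 0.9), (0.8, 0.2), (0.5, 0.5)]
descriptors_b = [(0.82, 0.18), (0.12, 0.88)]

tree = build_kdtree(list(enumerate(descriptors_a)))
# For each descriptor in image B, find the index of its closest descriptor in image A.
matches = [(j, nearest(tree, q)[1]) for j, q in enumerate(descriptors_b)]
print(matches)
```

The tree lets each query skip most of the stored descriptors instead of comparing against every one, which is what makes matching thousands of keypoints per image pair practical.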

Unfortunately I could not find a way to make Hugin deal with a set of pictures taken mostly straight down and from a moving camera position. Hugin seems to be optimized for personal panoramas … the sort of pictures you would take from the top of a mountain when just one shot can’t capture the full vista. Stitching aerial images together involves a moving camera vantage point and this seems to confuse all of Hugin’s built-in assumptions.

I couldn’t find a way to coax Hugin into doing the task. If you know how to make this work with Hugin, please let me know! Send me an email or comment at the bottom of this post!

Attempt #2: flightriot.com + Visual SFM + CMPMVS

Someone suggested I check out flightriot.com. This looks like a great resource and they have outlined a processing path using a number of free or open-source tools.

Unfortunately I came up short with this tool path as well. From the pictures and docs I could find on these software packages, it appears that the primary goal of this site (and referenced software packages) is to create a 3D surface model from the aerial pictures. This is a really cool thing to see when it works, but it’s not the direction I am going with my work. I’m more interested in building top-down maps.

Am I missing something here? Can this software be used to stitch photos together into larger seamless aerial maps? Please let me know!

Attempt #3: Microsoft ICE (Image Composite Editor)

Ok, now we are getting somewhere. MS ICE is a slick program. It’s highly automated to the point of not even offering much ability for user intervention. You simply throw a pile of pictures at it, and it finds keypoint matches and tries to stitch a panorama together for you. It’s easy to use, and does some nice work. However, it does not take any geo information into consideration. As it fits images together you can see evidence of progressively increasing scale and orientation distortion. It has trouble getting all the edges to line up just right, and occasionally it fits an image into a completely wrong spot. But it does feather the edges of the seams so the final result has a nice look to it. Here is an example. (Click the image for a larger version.)

The result is rotated about 180 degrees off, and the scale at the top is grossly exaggerated compared to the scale at the bottom of the image. If you look closely, it has a lot of trouble matching up the straight line edges in the image. So ICE does a commendable job for what I’ve given it, but I’m still way short of my goal.

Here is another image set stitched with ICE. You can see it does a better job avoiding progressive scaling errors on this set. However, linear features are still crooked, there are many visual discontinuities, and in one spot it has completely bungled the fit and inserted a fragment completely wrong. So it still falls pretty short of my goal of making a perfectly scaled, positioned, and seamless map that would be useful for science.

Attempt #4: Write my own stitching software

How hard could it be … ? 😉

- Find the features/keypoints in all the images.

- Compute a descriptor for each keypoint.

- Match keypoint descriptors between all possible pairs of images.

- Filter out bad matches.

- Transform each image so that its keypoint position matches exactly (maybe closely? maybe roughly on the same planet as ….?) that same keypoint as it is found in all other matching images.
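As a rough illustration of that last step, here is one simple way to fit a transform to a set of matched keypoints: a least-squares similarity transform (scale, rotation, translation). This is only a sketch under my own assumptions, not necessarily the fit used for the results further down. The function names are made up, and a real stitcher might prefer an affine transform or a homography. Treating each 2-D point as a complex number keeps the math short.

```python
import cmath

def fit_similarity(src, dst):
    """Least-squares similarity transform mapping src points onto dst points.

    src, dst: equal-length lists of matched (x, y) tuples.
    Returns (scale, rotation_radians, (tx, ty)).
    """
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    pm = sum(p) / len(p)                      # centroid of source points
    qm = sum(q) / len(q)                      # centroid of destination points
    # Complex linear regression: q ~ a * p + b, where a encodes scale and rotation.
    num = sum((qi - qm) * (pi - pm).conjugate() for pi, qi in zip(p, q))
    den = sum(abs(pi - pm) ** 2 for pi in p)
    a = num / den
    b = qm - a * pm
    return abs(a), cmath.phase(a), (b.real, b.imag)

def apply_similarity(params, point):
    """Apply a fitted (scale, rotation, translation) to one (x, y) point."""
    scale, rotation, (tx, ty) = params
    z = cmath.rect(scale, rotation) * complex(*point) + complex(tx, ty)
    return (z.real, z.imag)

# Hypothetical matched keypoints: dst is src scaled by 2, rotated 90 degrees,
# and shifted by (10, 5).
src = [(0, 0), (1, 0), (0, 1), (1, 1)]
dst = [(10, 5), (10, 7), (8, 5), (8, 7)]

scale, rotation, shift = fit_similarity(src, dst)
print(scale, rotation, shift)
```

With noisy matches the fit will not be exact, which is where the "maybe closely?" in the list above comes in: the residual after the fit tells you how far each keypoint still is from its partner.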

I do have an advantage I haven’t mentioned until now: I have pretty accurate knowledge of where the camera was when the image was taken, including the roll, pitch, and yaw (“true” heading). I am running a 15-state Kalman filter that estimates attitude from the GPS + inertials. Thus it converges to “true” heading, not magnetic heading, not ground track, but true orientation. Knowing true heading is critically important for accurately projecting images into map space.
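To show why the attitude estimate matters, here is a minimal sketch of projecting one pixel onto the ground from a known camera pose. Everything about the setup is an assumption for illustration: a pinhole camera, flat ground, a north/east/down frame, the usual yaw-pitch-roll rotation order, and a camera bolted on looking straight down the body z axis with the top of the image toward the nose. The function and parameter names are hypothetical.

```python
import math

def body_to_ned(roll, pitch, yaw):
    """Rotation matrix from body axes (x fwd, y right, z down) to north/east/down."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cp * cy, sr * sp * cy - cr * sy, cr * sp * cy + sr * sy],
        [cp * sy, sr * sp * sy + cr * cy, cr * sp * sy - sr * cy],
        [-sp, sr * cp, cr * cp],
    ]

def pixel_to_ground(u, v, focal_px, center, cam_ne, agl, roll, pitch, yaw):
    """Project pixel (u, v) onto flat ground; returns (north, east) or None.

    focal_px: focal length in pixels; center: principal point (cx, cy);
    cam_ne: camera (north, east) position; agl: height above ground;
    angles in radians.
    """
    cx, cy = center
    # Ray through the pixel in body axes: image up = forward, image right = right.
    ray_body = (-(v - cy), u - cx, focal_px)
    R = body_to_ned(roll, pitch, yaw)
    n, e, d = (sum(R[r][c] * ray_body[c] for c in range(3)) for r in range(3))
    if d <= 0:
        return None                           # ray points at or above the horizon
    t = agl / d                               # stretch the ray until it hits the ground
    return (cam_ne[0] + t * n, cam_ne[1] + t * e)

# Ground footprint of a 640x480 image for a slightly banked, pitched camera.
corners = [(0, 0), (639, 0), (639, 479), (0, 479)]
footprint = [
    pixel_to_ground(u, v, 500.0, (319.5, 239.5), (0.0, 0.0), 100.0,
                    math.radians(5), math.radians(-3), math.radians(40))
    for u, v in corners
]
print(footprint)
```

Projecting the four image corners this way gives a skewed quadrilateral rather than a rectangle whenever the aircraft is not perfectly level, and an error in yaw rotates the whole footprint about the camera position.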

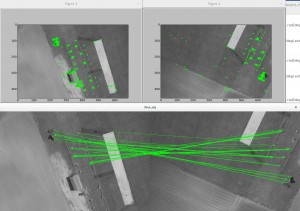

The following image shows the OpenCV “ORB” feature detector in action along with the feature matching between two images.

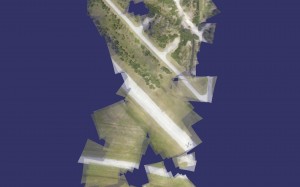
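ORB produces binary descriptors, which are compared by Hamming distance (the number of differing bits). The following is a small pure-Python sketch of brute-force matching with a ratio test to throw out ambiguous matches. It is not my actual OpenCV code. The 8-bit descriptors, the function names, and the 0.75 threshold are all assumptions chosen for illustration; real ORB descriptors are 256 bits long.

```python
def hamming(a, b):
    """Number of bits that differ between two integer-encoded descriptors."""
    return bin(a ^ b).count("1")

def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Match each descriptor in desc_a to its nearest neighbor in desc_b.

    A match is kept only when the best distance is clearly smaller than the
    second-best distance (ratio test). Returns (index_a, index_b, distance).
    """
    matches = []
    for i, da in enumerate(desc_a):
        ranked = sorted((hamming(da, db), j) for j, db in enumerate(desc_b))
        if len(ranked) < 2:
            continue                          # need two candidates to compare
        (d1, j1), (d2, _) = ranked[0], ranked[1]
        if d1 < ratio * d2:
            matches.append((i, j1, d1))
    return matches

# Toy 8-bit descriptors from two overlapping images (hypothetical values).
desc_a = [0b11110000, 0b00001111, 0b10101010]
desc_b = [0b11110001, 0b00011111, 0b01100110]

good = match_descriptors(desc_a, desc_b)
print(good)
```

The third descriptor in `desc_a` is roughly equally far from everything in `desc_b`, so the ratio test drops it. Repetitive texture such as crop rows or pavement tends to produce exactly this kind of ambiguous match.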

Compare the following fit to the first ICE fit above. You can see a myriad of tiny discrepancies. I’ve made no attempt to feather the edges of the seams, and in fact I’m drawing every image in the data set using partial translucency. But this fit does a pretty good job at preserving the overall geographically correct scale, position, and orientation of all the individual images.

Here is a second data set taken of the same area. This corresponds to the second ICE picture above. Hopefully you can see that straight line edges, orientations, and scaling are better preserved.

Perhaps you might also notice that because my own software tool set understands the camera location when the image was taken, the projection of the image into map space is more accurately warped (none of the images have straight edge lines).

Do you have any thoughts, ideas, or suggestions?

This is my first adventure with image stitching and feature matching. I am not pretending to be an expert here. The purpose of this post is to hopefully get some feedback from people who have been down this road before and perhaps found a better or different path through. I’m sure I’ve missed some good tools, and good ideas that would improve the final result. I would love to hear your comments and suggestions and experiences. Can I make one of these data sets available to experiment with?

To be continued….

Expect updates, edits, and additions to this posting as I continue to chew on this subject matter.